All VMware 3V0-624 certification exam dumps, study guides, and training courses are prepared by industry experts. PrepAway's ETE files provide 3V0-624 VMware Certified Advanced Professional 6.5 - Data Center Virtualization Design practice test questions and answers, exam dumps, a study guide, and training courses to help you study and pass hassle-free!

Complete VMware 3V0-624 Certification Preparation Handbook

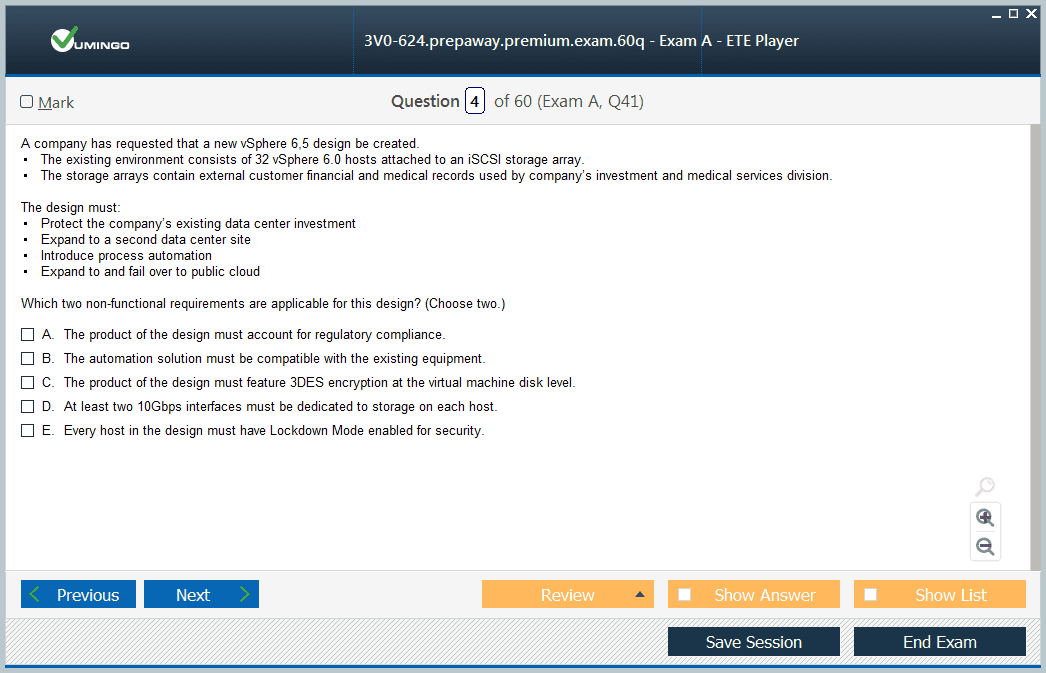

The 3V0-624 exam focuses on evaluating a professional’s ability to design a VMware vSphere datacenter that aligns with business objectives while maintaining performance, scalability, and reliability. It tests how well a candidate can interpret complex requirements, translate them into architectural components, and justify design decisions with technical reasoning. The exam is not just about theoretical understanding but also about the application of architectural principles in practical, enterprise-level environments. Candidates are expected to think like architects who balance business priorities, risk factors, and technical constraints to create solutions that are efficient, resilient, and compliant.

The exam structure typically involves scenario-based questions that simulate real-world challenges. Each scenario provides contextual details about an organization’s needs, infrastructure constraints, and future goals. The candidate must analyze the information carefully to identify functional and non-functional requirements, assess risks, and design solutions that address both technical and business dimensions. This analytical approach is what differentiates the exam from operational or administrative certifications.

Understanding Design Fundamentals

A successful datacenter design begins with a clear understanding of what the business aims to achieve. The architect must capture requirements through discussions with stakeholders, document them systematically, and translate them into logical and physical components. Functional requirements define the core tasks the system must perform, such as hosting a defined set of workloads or providing centralized resource management. Non-functional requirements, on the other hand, describe the qualities the system must exhibit, such as availability, performance, scalability, manageability, and compliance. Understanding how these requirements differ is critical to structuring a design that meets all objectives.

Another essential aspect of design is recognizing constraints, assumptions, and risks. Constraints are factors that limit design choices, such as hardware budgets or existing legacy systems. Assumptions are conditions believed to be true for the purpose of design but may need validation later. Risks represent potential threats to success, such as single points of failure or scalability limitations. The ability to correctly identify and categorize these factors determines the stability and success of the overall design.

Architectural Design Process

The architectural process begins with conceptual design, where the architect defines the scope, objectives, and high-level components of the environment. This phase establishes what needs to be built and how it aligns with the business vision. Logical design follows, focusing on relationships between components such as clusters, networks, and storage systems. This step ensures that each component supports the defined requirements without conflict or dependency issues. The final stage is the physical design, where the architect determines specific configurations, such as hardware models, network topology, and storage tiers.

Scalability and availability must be built into the design from the start. The architecture should support the organization’s growth without significant redesign. Resource pools, distributed switches, and cluster designs should accommodate future expansion seamlessly. Similarly, redundancy should be integrated into key areas such as management servers, storage arrays, and network paths to ensure that operations continue even when individual components fail.

Performance optimization is another crucial design consideration. Proper allocation of CPU, memory, and storage resources ensures that workloads operate efficiently. The architect must balance workloads across clusters to prevent resource contention and design policies that adapt dynamically to workload changes. Capacity planning tools and monitoring frameworks should be incorporated to forecast future needs and maintain consistent performance.

Logical and Physical Design Alignment

The logical design defines the abstract relationships between components without committing to specific configurations. It represents how systems will interact, communicate, and support business functions. The physical design then implements these relationships in concrete form, specifying the hardware and network architecture required to achieve them. For instance, the logical design may define a need for redundant storage connectivity, while the physical design specifies dual network interfaces and multipathing configurations to support it.

Consistency between the logical and physical layers is crucial. Misalignment between them can cause operational inefficiencies, performance degradation, or even downtime. The architect must validate that each logical component has a corresponding physical implementation and that dependencies are managed properly. Documentation of these mappings ensures clarity for deployment teams and simplifies maintenance.
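The mapping check described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the component names and the `mapping` dictionary are hypothetical examples, and a real design would track these mappings in its documentation set.

```python
def unmapped_components(logical_components, mapping):
    """Return logical components that have no physical implementation recorded."""
    return [name for name in logical_components if not mapping.get(name)]


# Hypothetical logical components taken from a design document.
logical_components = [
    "redundant storage connectivity",
    "management network",
    "compute cluster",
]

# Hypothetical logical-to-physical mapping; "management network" is missing.
mapping = {
    "redundant storage connectivity": [
        "dual network interfaces",
        "multipathing configuration",
    ],
    "compute cluster": ["rack servers (model to be decided)"],
}

missing = unmapped_components(logical_components, mapping)
print(missing)  # ['management network']
```

Any name in the result marks a gap between the two layers that should be closed before deployment.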

Risk Management and Mitigation

Every design decision involves trade-offs, and with trade-offs come risks. Effective risk management is therefore an integral part of the design process. Risks can stem from technical limitations, human factors, or environmental dependencies. For instance, a decision to centralize management services may improve control but increase dependency on a single site. Identifying these risks early allows the architect to design mitigation strategies such as failover mechanisms, redundant systems, or disaster recovery plans.

A thorough risk assessment ensures that the design remains resilient under varying conditions. Mitigation measures like backup strategies, high availability clustering, and load balancing protect the environment from disruptions. Periodic risk reviews during and after implementation help maintain system integrity as the environment evolves.

Integration and Interoperability

Datacenter environments rarely exist in isolation; they interact with numerous systems and services. Integration and interoperability are therefore key considerations in design. The architect must ensure that the datacenter integrates seamlessly with directory services, security frameworks, monitoring tools, and third-party management systems. This interoperability enables consistent operations and unified visibility across the infrastructure.

Network integration is particularly critical. Designing for redundancy, segmentation, and security ensures optimal data flow and protection. Storage integration requires careful planning to support both performance and capacity requirements. Compatibility between components—such as firmware versions, drivers, and hypervisor builds—must be verified to avoid conflicts and instability.

Automation plays a vital role in managing integrated systems. By incorporating orchestration tools and automated provisioning processes, the architect can enhance consistency and reduce manual errors. Automation also supports compliance by ensuring that configurations adhere to organizational standards every time a new resource is deployed.

Documentation and Validation

Comprehensive documentation is essential to communicate design intent and implementation steps clearly. Each architectural decision must be recorded along with the rationale behind it. This documentation serves as a reference for future maintenance, audits, and upgrades. Diagrams illustrating logical and physical layouts, resource relationships, and data flows help stakeholders visualize the environment and understand dependencies.

Validation ensures that the design functions as intended. This process involves testing the architecture against defined requirements to confirm performance, scalability, and resilience. Validation should be performed both before and after deployment. Simulation and pilot testing identify potential weaknesses that can be addressed before full implementation. Continuous validation ensures that the environment remains aligned with changing business needs and technology updates.

Stakeholder Communication and Decision Justification

Designing a datacenter involves balancing the perspectives of multiple stakeholders, each with different priorities. Executives focus on business value, cost efficiency, and risk management, while technical teams prioritize performance, availability, and manageability. The architect acts as the bridge between these perspectives, ensuring that technical decisions support business outcomes.

Effective communication is key to stakeholder alignment. Each design recommendation should be backed by clear reasoning that demonstrates its value. Presenting trade-offs transparently helps stakeholders understand the implications of different choices. For instance, explaining how a specific storage architecture enhances performance but increases cost allows informed decision-making.

Security and Compliance in Design

Security must be embedded into every stage of the design. This involves implementing layered controls that protect against unauthorized access, data breaches, and configuration drift. Identity and access management ensures that users have appropriate privileges based on their roles. Network segmentation and micro-segmentation isolate workloads, minimizing exposure in case of breaches.

Data protection is equally important. Encryption should be applied to data both in transit and at rest. Backup and replication strategies ensure that critical information can be recovered quickly in case of loss. Security monitoring tools should be integrated with automation systems to provide real-time alerts and automated responses to potential threats.

Compliance frameworks dictate standards that must be followed to meet regulatory or organizational requirements. By incorporating compliance checks into automation workflows, architects can ensure consistent adherence without manual oversight. Periodic reviews keep the environment aligned with evolving policies and best practices.
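As a rough sketch of what an automated compliance check might look like, the Python snippet below compares a host's settings against a baseline and reports any drift. The setting names (`ssh_enabled`, `ntp_server`, `lockdown_mode`) and their values are hypothetical placeholders, not identifiers from any VMware API.

```python
def find_drift(baseline, actual):
    """Map each non-compliant setting to a tuple of (expected, found)."""
    drift = {}
    for setting, expected in baseline.items():
        found = actual.get(setting)  # None when the setting is absent
        if found != expected:
            drift[setting] = (expected, found)
    return drift


# Hypothetical organizational baseline.
baseline = {
    "ssh_enabled": False,
    "ntp_server": "ntp.example.internal",
    "lockdown_mode": "normal",
}

# Hypothetical settings collected from one host.
host_config = {
    "ssh_enabled": True,
    "ntp_server": "ntp.example.internal",
}

drift = find_drift(baseline, host_config)
for setting, (expected, found) in drift.items():
    print(f"{setting}: expected {expected!r}, found {found!r}")
```

Running a check like this inside a provisioning workflow is one way to catch deviations at deployment time rather than during an audit.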

Performance and Capacity Planning

Designing for performance requires a deep understanding of workload characteristics. The architect must analyze usage patterns, identify bottlenecks, and allocate resources accordingly. Proper sizing of CPU, memory, storage, and network bandwidth prevents oversubscription and maintains optimal performance under varying loads.

Capacity planning ensures that the datacenter can scale efficiently as demand grows. Predictive analysis tools help forecast future requirements based on current trends. Planning for headroom ensures that the environment can handle unexpected spikes in demand without degradation. This proactive approach minimizes the need for emergency upgrades and keeps operations stable.
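The forecasting and headroom ideas above can be illustrated with simple arithmetic. The sketch below assumes compound annual growth and defines headroom as the fraction of installed capacity that should stay unused at the end of the planning period; both are modeling assumptions, and the numbers are invented for the example.

```python
def projected_demand(current, annual_growth_rate, years):
    """Compound growth: demand after the given number of years."""
    return current * (1 + annual_growth_rate) ** years


def required_capacity(current, annual_growth_rate, years, headroom_fraction):
    """Capacity needed so that projected demand leaves the requested headroom free."""
    if not 0 <= headroom_fraction < 1:
        raise ValueError("headroom_fraction must be in [0, 1)")
    demand = projected_demand(current, annual_growth_rate, years)
    return demand / (1 - headroom_fraction)


# Example: 100 TB used today, 20% yearly growth, 3-year horizon, 20% headroom.
demand_tb = projected_demand(100, 0.20, 3)
capacity_tb = required_capacity(100, 0.20, 3, 0.20)
print(f"Projected demand: {demand_tb:.1f} TB")     # about 172.8 TB
print(f"Capacity to install: {capacity_tb:.1f} TB")  # about 216.0 TB
```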

Performance optimization also involves tuning configurations based on real-time monitoring data. Continuous feedback loops allow administrators to adjust resource allocations dynamically, ensuring sustained efficiency.

Continuous Improvement and Lifecycle Management

Designing a datacenter is not a one-time effort but a continuous process. As business needs evolve, the architecture must adapt to new technologies, workload types, and performance expectations. Lifecycle management ensures that the environment remains current, secure, and aligned with objectives.

Regular assessments help identify areas for improvement. Upgrading components, refining automation workflows, and optimizing configurations maintain long-term stability. Documentation should be updated with every change to preserve design integrity.

Adopting a mindset of continuous improvement ensures that the datacenter evolves alongside business strategy. This proactive approach minimizes technical debt, enhances reliability, and supports innovation.

The 3V0-624 exam tests mastery of datacenter design principles, emphasizing analytical thinking, structured methodology, and strategic alignment. It evaluates not only technical proficiency but also the ability to apply architectural reasoning to solve complex challenges. Preparing for this exam involves developing a deep understanding of VMware infrastructure, learning how to classify requirements, and practicing design justification.

Success in this exam signifies that an individual can architect solutions that are efficient, secure, and scalable. It demonstrates expertise in aligning technical design with business strategy, managing risks, and optimizing performance. Through disciplined preparation and practical application, candidates can build the confidence and competence required to design modern datacenter environments that support enterprise growth and innovation.

Advanced Datacenter Design Principles

The 3V0-624 certification exam evaluates an architect’s ability to design and integrate VMware solutions that deliver high performance, scalability, and reliability. It requires a strong understanding of vSphere technologies, virtual infrastructure architecture, and business alignment strategies. The goal of a datacenter design is not just to implement technology but to ensure that the solution supports the long-term strategic objectives of the organization. This includes balancing cost efficiency, operational simplicity, and business continuity. The exam focuses heavily on design reasoning, requiring candidates to justify choices made during the architecture process, taking into account constraints, requirements, and risks.

Designing a datacenter solution involves the integration of multiple infrastructure components including compute, storage, networking, and management layers. Each of these elements must work together to provide a stable and flexible environment that supports existing workloads and accommodates future growth. A successful architect understands how these components interact, how they can be optimized, and how to mitigate potential conflicts between performance and availability.

The exam emphasizes a methodology-driven approach where candidates assess the current environment, define design requirements, propose solutions, and validate design choices. This methodology mirrors the real-world process of enterprise datacenter planning and encourages architects to think critically about how design decisions impact operations and maintenance.

Requirements Gathering and Analysis

Gathering accurate requirements is one of the most critical stages of any design project. The architect must communicate effectively with stakeholders to identify functional requirements that define what the system must do and non-functional requirements that specify how the system should perform. For example, uptime expectations, recovery time objectives, and performance targets all form part of non-functional requirements. Each requirement should be measurable and linked directly to business needs to ensure that the final design supports organizational objectives.
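One way to make an uptime expectation measurable is to convert the availability percentage into an allowed downtime budget. The Python sketch below does that for a 365-day year; the function name is illustrative, and the calculation ignores leap years and any distinction between planned and unplanned downtime.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent):
    """Yearly downtime budget implied by an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% availability allows about {minutes:.1f} minutes per year")
```

For example, a 99.9% target corresponds to roughly 525.6 minutes (under nine hours) of downtime per year, which gives stakeholders a concrete figure to accept or reject.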

The architect must also identify constraints that may limit design flexibility. Constraints can include budget restrictions, legacy hardware, existing licensing models, or mandated security policies. Recognizing these early helps prevent design conflicts and ensures realistic planning. Assumptions should be documented and validated throughout the design process. Unchecked assumptions can introduce risks that compromise reliability or compliance.

Once requirements are gathered, the architect analyzes dependencies and potential conflicts. For instance, a requirement for maximum performance may conflict with cost limitations. The role of the architect is to find a balance that delivers the best possible solution within the defined constraints. Proper documentation at this stage creates a foundation for informed decision-making and ensures transparency during implementation.

Conceptual and Logical Design Alignment

The conceptual design provides a high-level overview of the proposed architecture, defining its primary components and their interactions. It captures the essence of what the infrastructure will achieve without focusing on specific technical details. The logical design translates these concepts into structured elements such as clusters, data centers, and resource pools. It outlines how workloads will be distributed, how virtual networking will be structured, and how storage will be allocated.

In a VMware environment, the logical design might define the use of vCenter Server, distributed switches, and shared storage configurations. It determines logical relationships such as which virtual machines belong to specific resource pools, how they are grouped into clusters, and how workloads are prioritized. Logical design decisions must align with the conceptual design to maintain consistency and fulfill business goals.

For the 3V0-624 exam, candidates must demonstrate the ability to design logical components that meet availability and scalability objectives while remaining compliant with organizational standards. Logical design serves as the blueprint that informs the physical implementation, ensuring each technical component supports the overall strategy.

Physical Design and Implementation Strategy

The physical design phase translates the logical architecture into actual configurations and hardware specifications. This includes selecting specific server models, defining network topologies, and determining storage layout. The architect must decide how resources will be physically connected and how redundancy will be achieved across systems. The physical design should reflect not only performance requirements but also considerations such as power, cooling, and rack space efficiency.

Storage design plays a major role in physical architecture. It must support the desired performance levels and ensure data protection. The choice of storage protocols such as NFS, iSCSI, or Fibre Channel depends on performance expectations and existing infrastructure. Proper design ensures consistent throughput and minimizes latency, which is critical for workloads with high I/O demands.

Networking design in VMware environments requires detailed attention to redundancy, security, and traffic segmentation. Using distributed switches enhances manageability and supports consistent network policies across clusters. VLANs isolate traffic types from one another, while traffic shaping limits how much bandwidth a given workload can consume so that no single traffic type starves the others.

High availability and fault tolerance must be integrated at the hardware and virtualization levels. Redundant power supplies, NIC teaming, and multipath storage configurations all contribute to system resilience. The physical design should support business continuity strategies, enabling seamless recovery from component or site failures.
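The effect of redundancy on resilience can be estimated with basic probability. The sketch below assumes component failures are independent, which real environments only approximate (shared power, firmware bugs, and human error all correlate failures), so the results should be read as optimistic upper bounds. The availability figures are invented for illustration.

```python
def parallel_availability(component_availability, count):
    """Availability of `count` redundant components where one survivor is enough."""
    return 1 - (1 - component_availability) ** count


def series_availability(availabilities):
    """Availability of a chain in which every element must be up."""
    result = 1.0
    for value in availabilities:
        result *= value
    return result


# Two redundant network paths, each assumed to be 99% available.
paths = parallel_availability(0.99, 2)

# A host assumed to be 99% available that depends on those paths.
end_to_end = series_availability([0.99, paths])

print(f"Redundant paths: {paths:.4%}")
print(f"Host plus paths: {end_to_end:.4%}")
```

Under these assumptions the redundant pair reaches about 99.99%, and the remaining single host becomes the limiting factor, which is the kind of observation that helps justify where redundancy is added next.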

Risk Assessment and Mitigation Strategies

Risk management is a vital element of datacenter design. Every architectural decision introduces potential risks related to performance, security, cost, or manageability. Identifying and mitigating these risks early prevents costly rework and ensures long-term stability. The architect should categorize risks by their likelihood and impact, then prioritize mitigation strategies accordingly.
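A simple way to rank risks by likelihood and impact is a score equal to their product. The sketch below uses a 1 to 5 scale for both dimensions; the scale, the scoring rule, and the sample risks are assumptions chosen for illustration rather than a method mandated by the exam.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self):
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


risks = [
    Risk("Single management server instance", likelihood=2, impact=5),
    Risk("Single datastore for all workloads", likelihood=4, impact=4),
    Risk("Unvalidated growth assumption", likelihood=3, impact=2),
]

for risk in prioritize(risks):
    print(f"{risk.score:>2}  {risk.name}")
```

The ordered list gives a starting point for deciding which mitigations to fund first; teams often refine it with qualitative judgment.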

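For example, dependency on a single vCenter Server instance may represent a management risk. To mitigate this, the architect could deploy vCenter High Availability (vCenter HA), introduced in vSphere 6.5, or protect the vCenter Server virtual machine with vSphere HA. Enhanced Linked Mode, by contrast, joins multiple vCenter Server instances for unified management but does not by itself provide failover for an individual instance. Similarly, using a single datastore may create a performance bottleneck, which can be mitigated through datastore clusters with Storage DRS or tiered storage configurations.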

Documenting risks and associated mitigation measures is essential. This documentation allows stakeholders to understand trade-offs and make informed decisions. Regular reviews throughout the project lifecycle help track changes in risk exposure as the environment evolves. The ability to anticipate issues and incorporate preventive measures demonstrates the strategic mindset required for success in the 3V0-624 exam.

Integration, Automation, and Management

In a modern virtualized datacenter, integration and automation are key to achieving operational efficiency. VMware provides a suite of management tools that allow architects to unify monitoring, provisioning, and lifecycle management across the environment. Tools like vRealize Operations and vRealize Automation enable predictive analytics and self-service provisioning, respectively, reducing manual workloads and increasing consistency.

Integrating automation into design ensures that repetitive tasks such as virtual machine deployment, patching, and resource scaling are handled efficiently. Automated workflows reduce human error and enforce compliance by applying consistent configuration standards across all systems. Automation also plays a critical role in capacity management by dynamically allocating resources based on demand.

Management integration extends to performance monitoring and alerting. The architect should design a system that provides visibility into health metrics, resource usage, and potential bottlenecks. Centralized management allows administrators to make data-driven decisions that enhance stability and optimize utilization.

Security Architecture and Compliance

Security is a fundamental consideration throughout the datacenter design process. The architect must incorporate defense-in-depth strategies that protect infrastructure layers from unauthorized access and data breaches. VMware environments support several native security controls, including role-based access control, secure boot, and encryption for virtual machines and storage.

Segmentation is another key element of security design. Network micro-segmentation allows administrators to isolate workloads and restrict lateral movement within the environment. This ensures that even if one workload is compromised, others remain protected. Security policies should be consistent and centrally managed to reduce complexity.

Compliance requirements further influence security design. Whether based on internal policies or external standards, compliance frameworks dictate specific controls that must be implemented. Incorporating these controls during the design phase ensures that the environment adheres to necessary regulations without requiring extensive reconfiguration later. Regular audits and automated compliance checks maintain adherence and provide assurance that the datacenter operates securely.

Performance Optimization and Resource Efficiency

Performance optimization ensures that workloads operate efficiently within the designed infrastructure. The architect must understand resource allocation mechanisms in vSphere, including CPU scheduling, memory management, and storage I/O control. By applying these concepts, resources can be distributed dynamically based on workload demands.

Balancing performance with efficiency requires detailed capacity planning. The architect should assess current and projected workload requirements and design clusters that can scale horizontally or vertically as needed. Proper sizing avoids both over-provisioning, which wastes resources, and under-provisioning, which degrades performance.

Storage optimization is achieved through technologies like Storage DRS, thin provisioning, and deduplication. These features enhance performance while maximizing resource utilization. Similarly, network optimization techniques such as load balancing and traffic shaping improve data throughput and minimize congestion.

Validation and Testing

Validation confirms that the design meets the defined business and technical requirements. Testing ensures that all systems perform as expected under normal and stressed conditions. The architect should develop a validation plan that includes functional testing, performance benchmarking, and failover simulation.

During validation, each design component should be verified individually and in combination with others. This process identifies configuration errors, compatibility issues, or performance gaps before deployment. For example, validating storage latency under peak loads ensures that applications remain responsive.
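As an illustration of validating storage latency under peak load, the Python sketch below computes a nearest-rank percentile over a set of latency samples and compares it with a threshold. The samples, the 95th-percentile choice, and the 20 ms threshold are all hypothetical; actual targets come from the design's performance requirements.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical latency samples in milliseconds collected during a peak-load test.
latency_ms = [2, 3, 3, 4, 5, 5, 6, 8, 12, 25]
threshold_ms = 20  # hypothetical requirement

p95 = percentile(latency_ms, 95)
meets_requirement = p95 <= threshold_ms
print(f"p95 latency: {p95} ms, requirement met: {meets_requirement}")
```

Checking a high percentile rather than the average exposes the occasional slow operations that users tend to notice first.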

Testing should also include recovery scenarios to verify that high availability and disaster recovery mechanisms function correctly. Conducting these tests provides confidence that the datacenter can handle unexpected disruptions without compromising operations.

Documentation and Continuous Improvement

Comprehensive documentation is essential for maintaining design integrity and supporting future operations. It includes architectural diagrams, configuration guides, decision rationales, and operational procedures. This documentation ensures that administrators can understand and maintain the environment long after deployment.

Continuous improvement involves periodic assessments to evaluate performance, capacity, and compliance. As technology evolves, updates may be necessary to maintain efficiency and alignment with business objectives. Architects should adopt a proactive approach to reviewing and refining designs based on feedback and operational data.

Monitoring emerging technologies and incorporating innovations helps the datacenter remain adaptable and future-ready. The ability to evolve while maintaining stability demonstrates the maturity of a well-designed architecture.

The 3V0-624 certification represents deep mastery of VMware datacenter design principles. It validates the capability to architect complex infrastructures that align with organizational goals, maintain high performance, and ensure resilience. Achieving success in this exam requires not just technical expertise but also analytical thinking and strategic foresight.

A certified professional demonstrates the ability to translate business objectives into scalable, secure, and efficient virtual environments. This expertise contributes to operational excellence, reduced downtime, and optimized resource utilization. Mastery of these design principles enables architects to deliver infrastructures that are robust, flexible, and capable of supporting the evolving demands of enterprise virtualization.

Comprehensive Design Approach for Datacenter Virtualization

The 3V0-624 certification focuses on advanced datacenter virtualization design, requiring professionals to demonstrate their ability to translate business requirements into resilient, efficient, and scalable VMware infrastructures. Success in this exam demands a balance between theoretical understanding and practical design judgment. It evaluates not only how well a candidate knows VMware vSphere components but also how effectively they can integrate these technologies to achieve organizational objectives. The essence of this certification lies in the architect’s ability to construct an environment that is both technically sound and strategically aligned with business outcomes.

Designing a datacenter architecture requires a methodical approach where each component interacts seamlessly with others. The process begins with a deep understanding of business priorities, continues through requirement analysis and conceptual design, and culminates in a fully validated and optimized solution. The exam challenges candidates to apply this full design cycle, requiring decisions that account for performance, scalability, manageability, and cost-effectiveness. Every choice in architecture impacts another layer, whether it involves storage design, network layout, compute resource allocation, or disaster recovery strategy.

Business and Technical Requirement Alignment

The foundation of any successful datacenter design is the accurate identification of business and technical requirements. Business requirements define what the organization expects from the solution, such as improved uptime, faster recovery, or cost reduction. Technical requirements define how these goals will be achieved using VMware technologies and supporting components. Candidates must demonstrate their ability to interpret these requirements and ensure both types align without conflict.

In many environments, requirements can overlap or contradict. For example, a business may require high availability but also impose strict cost constraints. The role of the architect is to find an optimal balance that satisfies critical objectives without exceeding limitations. Stakeholder engagement plays an essential role in this process. Understanding the expectations of executives, IT managers, and system administrators ensures that the solution reflects organizational priorities. Each stakeholder brings a different perspective—executives focus on cost and risk, while administrators emphasize manageability and performance.

During the 3V0-624 exam, candidates encounter scenarios where they must classify information as a requirement, constraint, risk, or assumption. Recognizing the distinctions among these terms helps maintain design clarity. A requirement states what the design must deliver, a constraint restricts design freedom, an assumption is a condition accepted as true until it is validated, and a risk is something that could prevent the design from succeeding. The ability to identify and categorize these factors demonstrates strategic thinking and practical understanding of enterprise-level design.
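The four categories can be captured in a small register so that every statement from a scenario is tagged exactly once. The Python sketch below is one possible structure; the identifiers (`R01`, `C01`, and so on) and the sample statements are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class FactorType(Enum):
    REQUIREMENT = "requirement"
    CONSTRAINT = "constraint"
    ASSUMPTION = "assumption"
    RISK = "risk"


@dataclass(frozen=True)
class DesignFactor:
    identifier: str
    kind: FactorType
    statement: str


def group_by_type(factors):
    """Group factor identifiers by their classification."""
    grouped = defaultdict(list)
    for factor in factors:
        grouped[factor.kind].append(factor.identifier)
    return dict(grouped)


# Hypothetical statements extracted from a design scenario.
factors = [
    DesignFactor("R01", FactorType.REQUIREMENT, "Critical workloads must survive a host failure."),
    DesignFactor("C01", FactorType.CONSTRAINT, "Existing storage arrays must be reused."),
    DesignFactor("A01", FactorType.ASSUMPTION, "Sufficient rack space is available."),
    DesignFactor("K01", FactorType.RISK, "A single uplink switch is a single point of failure."),
]

for kind, identifiers in group_by_type(factors).items():
    print(f"{kind.value}: {', '.join(identifiers)}")
```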

Conceptual Design Development

Once the requirements are defined, the next phase involves developing the conceptual design. This stage represents the high-level vision of the solution without delving into specific configurations. It establishes the architectural intent and outlines how the solution will support business goals. The conceptual design serves as a communication tool for stakeholders, ensuring mutual understanding before technical implementation begins.

For VMware environments, the conceptual design describes major components such as compute clusters, shared storage systems, and virtual networks. It defines relationships between these components and identifies how they contribute to overall goals like performance optimization, fault tolerance, or workload flexibility. This stage allows architects to validate the design direction before moving into more detailed planning.

Conceptual design also introduces key design principles such as modularity and scalability. Modularity ensures that the datacenter can be expanded or modified without major redesign, while scalability ensures that performance can grow alongside workload demands. These principles form the basis for logical and physical design, providing the framework for decisions regarding resource distribution, redundancy, and management integration.

Logical Design and Resource Planning

The logical design phase transforms the conceptual framework into structured architecture, specifying how resources are logically grouped and managed. Logical design determines how compute, network, and storage resources will be abstracted and allocated to meet requirements. In VMware environments, this involves defining clusters, resource pools, distributed switches, and datastores.

One of the primary objectives of logical design is to ensure that resources are used efficiently while maintaining flexibility. For example, the architect may design resource pools to isolate workloads based on priority or department, ensuring that critical applications always receive sufficient CPU and memory resources. Similarly, logical networking design involves creating virtual switches and defining traffic segmentation to improve performance and security.
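To show how priority-based resource pools behave under contention, the sketch below divides a cluster's CPU capacity among sibling pools in proportion to their shares. It is a deliberate simplification: it ignores reservations, limits, and actual demand, all of which influence real allocations. The pool names, capacity, and share values (chosen in a 4:2:1 ratio) are illustrative.

```python
def allocate_by_shares(capacity_mhz, shares):
    """Split capacity among sibling pools in proportion to their shares."""
    total_shares = sum(shares.values())
    if total_shares <= 0:
        raise ValueError("total shares must be positive")
    return {
        pool: capacity_mhz * pool_shares / total_shares
        for pool, pool_shares in shares.items()
    }


# Hypothetical sibling resource pools with shares in a 4:2:1 ratio.
shares = {"critical": 4000, "standard": 2000, "test": 1000}

allocation = allocate_by_shares(70000, shares)
for pool, mhz in allocation.items():
    print(f"{pool}: {mhz:.0f} MHz")
```

With these numbers the critical pool is entitled to 40,000 MHz when all pools compete, which shows why share ratios, not absolute values, determine the outcome.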

Availability considerations play a crucial role in logical design. Features such as High Availability and Distributed Resource Scheduler must be configured logically to minimize downtime and balance resource usage across the hosts in each cluster. Logical design should also address fault domain separation to ensure that failures in one area do not impact the entire environment.

The 3V0-624 exam evaluates the candidate’s ability to create logical designs that align with functional and non-functional requirements. The architect must justify why specific configurations were chosen and how they contribute to the stability and scalability of the solution. Logical design decisions should always trace back to the conceptual objectives and business requirements established earlier.

Physical Design and Infrastructure Implementation

The physical design stage converts the logical architecture into specific hardware and configuration details. This includes selecting appropriate server models, defining network topologies, and designing storage layouts that align with performance targets. Physical design ensures that theoretical plans are grounded in practical feasibility.

Compute resource planning involves determining the number of hosts required to support workload demands while providing redundancy. Each host must be configured to meet minimum resource requirements and adhere to standardization policies for easier maintenance. Network design in the physical layer defines the arrangement of physical switches, uplinks, and redundancy paths. Proper segregation of management, vMotion, and storage traffic prevents performance interference and enhances reliability.
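The host-count reasoning above can be sketched as simple arithmetic. The following Python snippet is a minimal illustration rather than a sizing tool: the function name, the 80% utilization ceiling, and the single spare host are assumptions chosen for the example.

```python
import math


def hosts_required(demand_ghz, demand_ram_gb, host_ghz, host_ram_gb,
                   max_utilization=0.8, spare_hosts=1):
    """Hosts needed to carry the workload at a utilization ceiling, plus spares.

    All parameters are hypothetical planning inputs, not values read from vSphere.
    """
    usable_ghz = host_ghz * max_utilization
    usable_ram = host_ram_gb * max_utilization
    by_cpu = math.ceil(demand_ghz / usable_ghz)
    by_ram = math.ceil(demand_ram_gb / usable_ram)
    # The scarcer resource decides the count; spare hosts provide redundancy.
    return max(by_cpu, by_ram) + spare_hosts
```

For a workload of 400 GHz and 3,000 GB on hosts offering 80 GHz and 512 GB each, memory is the limiting resource: eight hosts carry the load and a ninth provides N+1 redundancy.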

Storage design in the physical phase focuses on selecting storage technologies that deliver the desired throughput and latency. It involves defining RAID levels, multipathing configurations, and replication strategies for disaster recovery. These decisions influence both performance and cost efficiency. An effective storage design supports workload demands while maintaining flexibility for future expansion.
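The capacity cost of a RAID level can be estimated with a few lines of Python. This is a rough sketch that ignores hot spares, formatting overhead, and vendor-specific layouts; the function name and the string labels for the levels are illustrative assumptions.

```python
def raid_usable_tb(disk_count, disk_tb, level):
    """Approximate usable capacity for common RAID levels with equal-sized disks."""
    if level == "RAID0":
        data_disks = disk_count                 # striping only, no redundancy
    elif level == "RAID5":
        if disk_count < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        data_disks = disk_count - 1             # one disk's worth of parity
    elif level == "RAID6":
        if disk_count < 4:
            raise ValueError("RAID6 needs at least 4 disks")
        data_disks = disk_count - 2             # two disks' worth of parity
    elif level == "RAID10":
        if disk_count < 4 or disk_count % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        data_disks = disk_count // 2            # every disk is mirrored
    else:
        raise ValueError(f"unsupported level: {level}")
    return data_disks * disk_tb
```

With eight 4 TB disks, this gives 28 TB for RAID5, 24 TB for RAID6, and 16 TB for RAID10, which makes the trade-off between protection and cost explicit.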

Cooling, power distribution, and rack placement are additional considerations that often impact physical layout. An optimized design ensures efficient resource utilization, reduces operational costs, and enhances the sustainability of the datacenter infrastructure.

Risk Management and Mitigation

Every design decision introduces some level of risk. Risk management in the 3V0-624 context involves identifying, assessing, and mitigating factors that could negatively impact performance, availability, or cost. The architect must analyze both technical and operational risks and implement measures to reduce their likelihood and impact.

Risks may arise from hardware failure, software bugs, configuration errors, or even human mistakes. A robust design anticipates these scenarios and incorporates redundancy, monitoring, and failover mechanisms. For example, implementing multiple vCenter Server instances or designing for site-level redundancy reduces the impact of management server failures. Similarly, using distributed switches with redundant uplinks and storage replication provides resilience against infrastructure outages.

Documentation of risks and mitigation strategies is crucial. Each identified risk should be assigned a probability and potential impact, along with an action plan to address it. This structured approach ensures accountability and enables continuous monitoring throughout the datacenter’s lifecycle.
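A risk register of this kind can be modeled as a small data structure. The sketch below assumes a 1 to 5 scale for both probability and impact and ranks risks by their product; the scale, the field names, and the sample entries are illustrative, not part of any VMware methodology.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int       # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str

    @property
    def score(self):
        # Simple probability-times-impact ranking.
        return self.probability * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    Risk("Single storage controller", 2, 5, "Add a second controller"),
    Risk("Operator misconfiguration", 4, 3, "Enforce change review"),
    Risk("Delayed hardware delivery", 3, 2, "Order early"),
]
for risk in prioritize(register):
    print(risk.score, risk.description, "->", risk.mitigation)
```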

Security and Compliance Design

Security forms the foundation of trust and stability in any datacenter environment. In a VMware architecture, security design must address multiple layers, including access control, network segmentation, and data protection. A comprehensive design implements a defense-in-depth strategy that reduces exposure to vulnerabilities and ensures compliance with internal and external requirements.

Access management is typically the first line of defense. Role-based access control ensures that users have only the permissions necessary to perform their responsibilities. Network segmentation through distributed firewalls and micro-segmentation prevents unauthorized lateral movement within the virtual environment. Encryption technologies safeguard both data in transit and data at rest, protecting sensitive information from interception or unauthorized access.

Security must be embedded within every phase of the design rather than treated as a separate component. The architect should evaluate potential attack surfaces, implement least privilege principles, and design for continuous monitoring. Compliance frameworks often require specific configurations, auditing mechanisms, and documentation, all of which should be integrated into the design from the start.

Performance Optimization and Scalability

Performance and scalability are central to datacenter success. The architect must ensure that all design elements work harmoniously to deliver predictable performance even under heavy load. VMware technologies provide multiple tools and mechanisms for optimizing compute, storage, and networking performance.

Performance tuning begins with proper resource sizing. The architect should analyze workload profiles to determine CPU, memory, and storage requirements. Using features like resource reservations and limits helps maintain predictable performance for critical workloads. Distributed Resource Scheduler further enhances efficiency by automatically balancing load across the hosts in a cluster.

Scalability ensures that the environment can grow as demands increase without requiring major redesign. Horizontal scalability allows for adding more hosts or clusters, while vertical scalability involves increasing resources within existing hardware. Designs should anticipate future needs by including capacity buffers and modular components that can be expanded easily.
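To make the idea of a capacity buffer concrete, the following sketch estimates how many months of compound growth fit before usage crosses the buffered ceiling. The growth model, the 20% default buffer, and the function name are assumptions for illustration.

```python
def months_until_buffer_breached(current_usage, capacity,
                                 monthly_growth_rate, buffer=0.2):
    """Months of compound growth until usage exceeds capacity minus the buffer."""
    if monthly_growth_rate <= 0:
        raise ValueError("growth rate must be positive")
    ceiling = capacity * (1 - buffer)
    usage = current_usage
    months = 0
    while usage <= ceiling:
        usage *= 1 + monthly_growth_rate
        months += 1
    return months
```

Starting at 50 units of a 100-unit environment with 10% monthly growth, usage passes the 80-unit ceiling after five months, which is the window available for ordering and installing additional hosts.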

Regular performance assessments and capacity planning reviews ensure that the environment continues to meet objectives as workloads evolve. Automation tools can be integrated to monitor performance metrics and adjust configurations dynamically to maintain optimal operation.

Validation, Documentation, and Maintenance

The final phase of the design process involves validating that all components meet the original requirements and function as intended. Validation testing includes performance benchmarking, failover simulations, and system recovery drills. Each component should be tested in isolation and as part of the integrated environment to verify compatibility and reliability.

Documentation is essential for long-term operational success. Detailed records of design decisions, configurations, and dependencies enable efficient troubleshooting and future upgrades. Documentation should include network diagrams, hardware inventories, and policy settings to provide a comprehensive view of the environment.

Ongoing maintenance ensures that the datacenter remains aligned with evolving business and technological requirements. Regular health checks, patch management, and configuration audits maintain security and performance standards. Continuous improvement practices encourage iterative updates to design and operation based on feedback and performance data.

The 3V0-624 exam assesses not only technical proficiency but also the strategic mindset required for designing enterprise-grade datacenter solutions. It validates the architect’s ability to think holistically, balancing innovation with practicality. Every decision in a VMware datacenter design affects performance, reliability, and scalability, making precision and foresight essential.

Achieving mastery in this domain requires deep technical knowledge combined with analytical reasoning. Successful architects understand that a datacenter design is not a static achievement but a dynamic system that must evolve with organizational needs. The principles assessed in this certification—requirement analysis, logical and physical design, risk management, and validation—form the foundation of resilient virtual infrastructures capable of supporting complex enterprise workloads.

Advanced Architectural Principles in Datacenter Virtualization Design

The 3V0-624 certification examines an individual’s ability to design and implement VMware vSphere environments that align with enterprise objectives. It requires not only technical knowledge but also architectural reasoning that translates complex business needs into a resilient and high-performing infrastructure. The exam emphasizes decision-making skills and an understanding of interdependencies across compute, storage, and network components. Each element must integrate into a unified system capable of sustaining operational efficiency and long-term scalability.

Designing a datacenter architecture begins with a systematic approach where each stage contributes to the creation of a well-balanced solution. The architect must assess current infrastructure, define objectives, analyze risks, and propose a design that enhances performance and reliability. Every configuration choice must have a justified purpose and align with both business and technical expectations. The goal is to design a VMware vSphere environment that maximizes resource utilization, minimizes downtime, and delivers predictable service levels.

Requirement Analysis and Design Mapping

Requirement analysis forms the core of every successful datacenter design. It involves identifying and categorizing the various needs of stakeholders and translating them into functional and non-functional requirements. Functional requirements describe what the system should do, such as supporting a defined number of virtual machines or enabling disaster recovery. Non-functional requirements specify how the system should perform, including parameters like latency, response time, or uptime targets.

In the context of the 3V0-624 exam, candidates must demonstrate the ability to differentiate between requirements, constraints, risks, and assumptions. Constraints are limiting factors that influence design flexibility, such as budget restrictions or specific vendor dependencies. Risks represent potential issues that could impact performance, availability, or project timelines. Assumptions are accepted conditions considered true in the absence of complete information. Understanding these distinctions ensures a clear and accurate foundation for the design process.

Mapping requirements to design components is an essential skill. The architect must select technologies and configurations that directly address the defined needs. For instance, if business continuity is a priority, high availability and replication strategies must be included. If scalability is critical, cluster designs must allow for seamless expansion. This analytical mapping ensures every requirement has a technical solution, making the final design both efficient and purpose-driven.
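One way to check that every requirement has a technical answer is a simple traceability test. The sketch below uses hypothetical requirement IDs and a hypothetical decision format (a dictionary with a `satisfies` list); neither comes from the exam blueprint.

```python
def untraced_requirements(requirement_ids, design_decisions):
    """Return requirement IDs that no design decision claims to satisfy."""
    covered = {rid for decision in design_decisions
               for rid in decision["satisfies"]}
    return sorted(set(requirement_ids) - covered)


requirements = ["R1-availability", "R2-scalability", "R3-encryption"]
decisions = [
    {"id": "D1", "summary": "Enable HA and replicate to a second site",
     "satisfies": ["R1-availability"]},
    {"id": "D2", "summary": "Size clusters with room for added hosts",
     "satisfies": ["R2-scalability"]},
]
print(untraced_requirements(requirements, decisions))
```

Any ID returned by the function is a requirement that the design has not yet addressed.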

Stakeholder communication is another vital component. Each stakeholder, whether technical or executive, has different expectations from the solution. Effective communication ensures alignment between business priorities and technical implementation. Misalignment often leads to overprovisioning, unnecessary complexity, or missed objectives. Therefore, architects must maintain constant dialogue to validate assumptions and refine requirements throughout the design phase.

Logical and Physical Architecture Integration

The design process transitions from conceptual to logical and finally to physical design. Logical architecture defines how resources are organized within the virtualized environment without focusing on specific hardware. This includes defining clusters, distributed switches, datastores, and virtual machine placement policies. Logical design ensures that the system structure supports scalability, load balancing, and management efficiency.

Physical architecture translates the logical blueprint into real-world implementation. This involves selecting server models, configuring network layouts, and defining storage connectivity. The physical layer ensures that the infrastructure supports redundancy and performance optimization. A well-designed physical architecture minimizes single points of failure and aligns with operational best practices for reliability.

Compute design is one of the most critical aspects of both logical and physical architecture. It determines how processing resources are distributed among workloads. Cluster configuration should balance capacity and redundancy, ensuring adequate performance under failure scenarios. Each host must be designed to accommodate the workloads it supports while allowing for maintenance and failover operations.

Network design focuses on ensuring data flows efficiently and securely across all infrastructure layers. vSphere Distributed Switches simplify network management, while traffic segregation using VLANs enhances performance and security. Proper network planning ensures that management, storage, and vMotion traffic operate independently, preventing congestion and performance degradation.

Storage design connects directly to both performance and resilience. Selecting appropriate storage protocols, defining multipathing policies, and designing datastore layouts are essential to achieving consistent performance. The architecture must accommodate various workload types, from latency-sensitive applications to large-scale storage demands. Storage redundancy through mirroring or replication ensures business continuity in case of hardware failure.

Resilience, Availability, and Disaster Recovery

High availability and fault tolerance are fundamental to datacenter design. These principles ensure that critical services remain operational even in the event of hardware or software failures. The architect must implement strategies that eliminate single points of failure at every layer. This involves designing redundancy for hosts, networks, and storage systems.

VMware technologies such as vSphere High Availability, Distributed Resource Scheduler, and Fault Tolerance play a vital role in maintaining uptime. High Availability automatically restarts virtual machines on healthy hosts after a failure, while DRS ensures balanced resource allocation. Fault Tolerance goes a step further by providing continuous availability for critical workloads through real-time mirroring. The architect must decide where to apply each feature based on workload importance and resource availability.

Disaster recovery planning extends resilience beyond the datacenter. Replication technologies and site recovery solutions ensure that workloads can be restored in case of a complete site outage. The design should define recovery time objectives and recovery point objectives, ensuring data integrity and minimal service interruption. Network design must support seamless failover between sites, maintaining consistent policies and access controls.
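Recovery objectives become testable once they are expressed as durations. The sketch below assumes that worst-case data loss is roughly one replication interval and that an estimated recovery time is available from testing; the function and parameter names are illustrative.

```python
from datetime import timedelta


def meets_objectives(replication_interval, estimated_recovery_time, rpo, rto):
    """Compare a replication schedule and recovery estimate with RPO and RTO."""
    return {
        # Data written since the last replication cycle may be lost.
        "rpo_met": replication_interval <= rpo,
        "rto_met": estimated_recovery_time <= rto,
    }


result = meets_objectives(
    replication_interval=timedelta(minutes=15),
    estimated_recovery_time=timedelta(hours=2),
    rpo=timedelta(minutes=30),
    rto=timedelta(hours=1),
)
print(result)
```

In this example the replication interval satisfies the recovery point objective, but the estimated recovery time exceeds the recovery time objective, so the design would need faster failover.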

Testing and validating failover mechanisms are as important as implementing them. Regular recovery simulations confirm that the disaster recovery plan functions as intended and that staff are prepared to execute recovery procedures efficiently. Continuous monitoring of replication status and failover readiness further enhances resilience.

Performance Optimization and Scalability Strategy

Performance optimization is integral to maintaining user satisfaction and operational efficiency. In VMware environments, performance depends on proper configuration of compute, storage, and networking layers. The architect must understand how each resource interacts under load and design accordingly to prevent bottlenecks.

Resource management policies allow workloads to perform predictably even in shared environments. Using reservations, limits, and shares helps ensure that critical applications receive the resources they need. Distributed Resource Scheduler automates load balancing across the hosts in a cluster, optimizing performance without administrative intervention.
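The interaction of reservations, limits, and shares under contention can be illustrated with a simplified model. The sketch below is not the ESXi scheduler: it assumes each VM first receives its reservation, then divides the remaining capacity in proportion to shares, capping each VM at its limit and redistributing what is left over. Shares are assumed to be positive.

```python
def allocate(capacity, vms):
    """Simplified allocation: reservations first, then shares, capped by limits.

    vms maps a name to {"shares": int, "reservation": float, "limit": float}.
    """
    alloc = {name: vm["reservation"] for name, vm in vms.items()}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed capacity")
    active = {name for name, vm in vms.items() if alloc[name] < vm["limit"]}
    while remaining > 1e-9 and active:
        total_shares = sum(vms[name]["shares"] for name in active)
        granted = 0.0
        for name in list(active):
            offer = remaining * vms[name]["shares"] / total_shares
            take = min(offer, vms[name]["limit"] - alloc[name])
            alloc[name] += take
            granted += take
            if alloc[name] >= vms[name]["limit"] - 1e-9:
                active.discard(name)  # capped VMs release their surplus
        remaining -= granted
    return alloc


vms = {
    "A": {"shares": 2000, "reservation": 1000, "limit": 10000},
    "B": {"shares": 1000, "reservation": 0, "limit": 10000},
    "C": {"shares": 1000, "reservation": 0, "limit": 1500},
}
print(allocate(10000, vms))
```

With 10,000 MHz of capacity, VM C stops at its 1,500 MHz limit, and the surplus is split between A and B in a 2:1 ratio, giving roughly 6,000 MHz and 2,500 MHz.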

Scalability planning ensures that the environment can grow as business needs evolve. The architecture should support both horizontal and vertical scaling. Horizontal scaling adds more hosts or clusters to the environment, while vertical scaling increases resources within existing servers. The design must account for the operational and financial impact of scaling to avoid overprovisioning or underutilization.

Monitoring tools help maintain optimal performance by providing visibility into resource utilization, latency, and potential issues. Proactive performance management allows architects to adjust configurations before performance degradation occurs. Predictive analytics can anticipate future demand and guide capacity planning efforts.

Workload placement is another critical factor. Understanding the performance characteristics of each workload allows the architect to assign appropriate resources. Workloads that generate high input/output operations per second require low-latency storage solutions, while compute-intensive workloads benefit from CPU optimization. By categorizing and isolating workloads, the architect can prevent resource contention and ensure consistent performance.

Security and Governance in Design

Security and governance are essential pillars of datacenter design. The architecture must protect data, applications, and infrastructure from unauthorized access while maintaining compliance with organizational policies. Security must be integrated into the design from the initial planning stage rather than added as an afterthought.

Access control mechanisms regulate who can perform specific actions within the environment. Role-based access control ensures that users only have permissions necessary for their tasks. Centralized authentication integration enhances security by providing consistent policy enforcement.

Network security within a virtualized datacenter involves segmentation and isolation. Micro-segmentation enables fine-grained control over traffic between virtual machines, preventing lateral movement in the event of a breach. Firewalls and intrusion detection systems strengthen defenses by monitoring and controlling network flows.
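The effect of micro-segmentation can be pictured as a default-deny rule table evaluated per flow. The sketch below is a conceptual model only; the group names, ports, and first-match semantics are assumptions and do not represent any product's rule engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    src_group: str
    dst_group: str
    port: int
    action: str  # "allow" or "deny"


def evaluate(rules, src_group, dst_group, port):
    """First matching rule wins; traffic that matches nothing is denied."""
    for rule in rules:
        if (rule.src_group, rule.dst_group, rule.port) == (src_group, dst_group, port):
            return rule.action
    return "deny"


rules = [
    Rule("web", "app", 8443, "allow"),
    Rule("app", "db", 5432, "allow"),
]
print(evaluate(rules, "web", "app", 8443))  # permitted tier-to-tier flow
print(evaluate(rules, "web", "db", 5432))   # lateral movement falls to default deny
```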

Data protection strategies safeguard information both in transit and at rest. Encryption should be applied wherever sensitive data is stored or transmitted. Regular backups and replication ensure that data can be recovered quickly in case of corruption or loss. The architect must design storage and network configurations that support encryption without degrading performance.

Governance ensures that operational practices remain consistent and compliant with standards. Automated policies can enforce configuration baselines, ensuring that all virtual machines adhere to approved settings. Auditing and reporting tools track changes, providing accountability and supporting internal and external audits.
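A configuration baseline check reduces to comparing approved values with what is actually deployed. In the sketch below the setting names are hypothetical placeholders, not real vSphere advanced settings.

```python
def find_drift(baseline, actual):
    """Return {setting: (expected, found)} for every setting that deviates."""
    return {
        key: (expected, actual.get(key))
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }


baseline = {"ssh_enabled": False, "ntp_server": "ntp.example.internal"}
host_config = {"ssh_enabled": True, "ntp_server": "ntp.example.internal"}
print(find_drift(baseline, host_config))
```

A setting that is missing from the actual configuration is reported with `None` as the found value, so gaps are flagged as well as mismatches.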

Validation, Documentation, and Continuous Improvement

Validation is the process of ensuring that the design meets all specified requirements and functions as intended. It involves testing performance, availability, and fault tolerance under various scenarios. Validation identifies weaknesses early, reducing the risk of deployment issues.

Comprehensive documentation provides transparency and supports ongoing operations. It should include architectural diagrams, configuration details, and operational guidelines. Documentation helps administrators understand design intent and ensures consistency during maintenance or expansion activities.

Continuous improvement ensures that the datacenter design evolves alongside technological advancements and organizational growth. Regular assessments help identify areas for enhancement, such as adopting automation tools or refining monitoring systems. Lessons learned from operations and incidents feed back into the design process, creating a cycle of refinement and optimization.

Knowledge sharing within the organization ensures that design best practices are consistently applied across projects. Collaboration between architects, administrators, and management promotes alignment between operational execution and strategic objectives.

The 3V0-624 certification represents advanced expertise in datacenter virtualization design and architecture. It validates an architect’s ability to combine technical skill with analytical reasoning to deliver scalable, secure, and efficient solutions. Success in this certification requires deep understanding of VMware vSphere components, design methodologies, and the relationship between technology and business value.

A well-designed datacenter is more than a collection of virtual machines; it is a cohesive system engineered for reliability, flexibility, and performance. Architects who master the principles evaluated in this exam are capable of creating infrastructures that adapt to change, maintain stability, and support strategic growth. The ability to balance innovation with practicality distinguishes effective architects, ensuring that the datacenter remains a robust foundation for organizational success.

Core Principles of VMware Datacenter Virtualization Design

The 3V0-624 certification evaluates a candidate’s ability to design and architect VMware vSphere environments that align with organizational goals, technical requirements, and long-term scalability. This exam focuses heavily on design reasoning, decision justification, and the ability to translate complex business demands into a structured and reliable virtualized infrastructure. The design process in datacenter virtualization is not merely about deploying technology but about aligning architecture with strategy, performance, and operational efficiency.

To succeed in this exam, candidates must understand how to evaluate and design all layers of a vSphere-based datacenter. These layers include compute, storage, networking, management, and security. Each element contributes to an overall architecture that ensures performance, scalability, and resilience. The architect’s role is to develop a holistic solution where every configuration choice supports the larger objective of maintaining uptime, improving manageability, and delivering consistent performance across workloads.

Design begins by assessing current infrastructure capabilities and mapping them against future business needs. Architects must determine how to modernize systems, optimize hardware utilization, and minimize operational risks while ensuring compatibility and sustainability. The focus is on building an adaptable infrastructure that remains efficient under changing workloads and organizational growth.

Design Methodology and Conceptual Planning

Designing a datacenter starts with a systematic methodology that guides the architect from conceptual to physical implementation. The conceptual phase focuses on understanding the organization’s vision, challenges, and service delivery expectations. This phase defines the problem space and establishes a foundation for subsequent decisions.

Requirement gathering plays a vital role in this stage. It includes functional requirements, which specify what the system should deliver, and non-functional requirements, which define how the system should perform. For example, a functional requirement may call for supporting a specific number of virtual machines, while a non-functional one might dictate latency thresholds or recovery times.

The architect must also identify constraints, assumptions, and risks. Constraints could include hardware limitations, regulatory standards, or budget restrictions. Assumptions refer to factors believed to be true but unverified, such as network latency expectations or vendor delivery schedules. Risks are potential events that could affect the design’s success, such as compatibility issues or capacity bottlenecks. Managing these aspects early in the design ensures stability during implementation.

During conceptual planning, architects must align the virtual infrastructure design with business priorities. The objective is to ensure that the proposed solution supports key initiatives such as digital transformation, automation, or workload modernization. Each design decision should deliver measurable business value through cost efficiency, performance improvement, or reduced management complexity.

Logical Design Framework and Intercomponent Relationships

Logical design translates conceptual ideas into an organized architecture that defines how resources will interact in the virtual environment. This layer focuses on how virtual machines, clusters, networks, and datastores are structured to deliver the required services. Logical design abstracts physical hardware and focuses on relationships, dependencies, and system behavior.

Compute design at the logical level defines cluster configurations, host sizing, and virtual machine distribution strategies. Clusters must be configured for load balancing, fault tolerance, and scalability. The architect should ensure that the cluster capacity allows for maintenance and failover without performance degradation.
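The failover headroom described above can be expressed as a percentage of cluster capacity. This sketch assumes identical hosts and an evenly spread load; the function names are illustrative.

```python
def failover_reserve_percent(host_count, failures_to_tolerate=1):
    """Share of cluster capacity to keep free so the given failures can be absorbed."""
    if not 0 < failures_to_tolerate < host_count:
        raise ValueError("failures_to_tolerate must be between 1 and host_count - 1")
    return 100 * failures_to_tolerate / host_count


def capacity_after_failures(host_count, per_host_capacity, failures_to_tolerate=1):
    """Capacity that remains available once the tolerated hosts are lost."""
    return (host_count - failures_to_tolerate) * per_host_capacity
```

An eight-host cluster that must survive one host failure needs 12.5% of its capacity held in reserve; with 512 GB per host, 3,584 GB remains usable for workloads.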

Networking design establishes logical connections between system components. vSphere Distributed Switches, port groups, and network segmentation policies are defined at this stage. Segregating traffic types (management, storage, vMotion, and virtual machine traffic) prevents interference and ensures optimal throughput.
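A design review can verify traffic segregation mechanically. The sketch below assumes the design records one VLAN ID per traffic type and reports any VLAN that carries more than one type; the VLAN numbers are made up for the example.

```python
from collections import defaultdict


def shared_vlans(vlan_by_traffic_type):
    """Return {vlan_id: [traffic types]} for VLANs used by more than one type."""
    by_vlan = defaultdict(list)
    for traffic_type, vlan_id in vlan_by_traffic_type.items():
        by_vlan[vlan_id].append(traffic_type)
    return {vlan: sorted(types) for vlan, types in by_vlan.items() if len(types) > 1}


plan = {"management": 10, "vmotion": 20, "storage": 20, "vm": 100}
print(shared_vlans(plan))
```

Here vMotion and storage traffic share VLAN 20, which the check flags as a segregation gap.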

Storage design defines how data is provisioned and accessed. It involves creating datastore clusters, selecting storage protocols, and defining policies for replication and redundancy. The logical storage design ensures that performance and capacity align with workload requirements and that the architecture supports scalability without data migration disruptions.

Management design covers how the environment will be monitored, configured, and maintained. The architect must determine which management tools will be used for automation, alerting, and performance tracking. Efficient management design ensures operational consistency and reduces administrative overhead.

Physical Architecture and Infrastructure Implementation

The physical design translates the logical architecture into actual infrastructure specifications. It defines server models, network topology, storage arrays, and cabling structures. The goal is to ensure that the physical environment supports the logical configuration while maintaining redundancy, performance, and manageability.

Compute resources form the foundation of the physical layer. The architect must determine the appropriate server hardware, processor configurations, and memory capacity. Host designs should balance performance and cost efficiency, while maintaining headroom for future expansion.

Storage systems must be selected based on workload types and performance requirements. Tiered storage can be implemented to optimize cost and performance by aligning different storage types with varying workloads. Redundancy mechanisms, such as RAID configurations and multipathing, ensure data protection and availability.

The physical network design defines switch configurations, VLAN assignments, and interconnects between systems. Redundant connections and link aggregation prevent downtime caused by single link failures. Properly segmented and optimized networks also enhance security and streamline data flow across the environment.

Physical security should also be integrated into the design. Access control to hardware, proper rack organization, and environmental controls contribute to the reliability and safety of the datacenter. Each component must be physically protected and maintained according to operational best practices.

Resource Management and Performance Optimization

In VMware environments, resource management ensures efficient allocation of compute, storage, and networking capabilities across virtual workloads. This requires balancing performance with availability to prevent contention and maintain service levels.

Resource pools allow logical segmentation of compute resources, ensuring that critical workloads always have access to necessary CPU and memory. Proper configuration of reservations, limits, and shares helps prioritize resource allocation. Resource management strategies must also consider peak demand periods and failover scenarios to avoid resource starvation.

Performance optimization requires continuous assessment of workloads and system health. Monitoring tools provide visibility into key metrics such as CPU utilization, storage latency, and network throughput. Proactive analysis helps identify bottlenecks before they affect performance. The architect must establish baseline performance metrics during validation to compare future operational data.
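Comparing operational data with a validation baseline can be as simple as a tolerance check. The sketch below assumes metrics where a higher value is worse (utilization, latency) and a 10% tolerance; metric names and values are illustrative.

```python
def regressions(baseline, current, tolerance=0.10):
    """Return {metric: (baseline, current)} where current exceeds baseline by more than tolerance.

    Assumes higher values are worse; throughput-style metrics need the opposite test.
    """
    return {
        metric: (baseline[metric], value)
        for metric, value in current.items()
        if metric in baseline and value > baseline[metric] * (1 + tolerance)
    }


baseline = {"cpu_util_pct": 60.0, "storage_latency_ms": 5.0}
current = {"cpu_util_pct": 63.0, "storage_latency_ms": 6.0}
print(regressions(baseline, current))
```

CPU utilization stays within tolerance, while storage latency has risen by 20% and is reported for investigation.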

Workload placement is another performance consideration. Virtual machines should be placed based on their performance characteristics and dependencies. Workloads with high input/output demands should be assigned to low-latency storage, while compute-intensive workloads benefit from careful vCPU sizing and memory optimization. CPU pinning (affinity) should be used sparingly, because it restricts the ability of the scheduler and Distributed Resource Scheduler to balance load.

Scalability strategies ensure that performance remains consistent as the environment grows. The design must accommodate both vertical scaling, which increases resources within existing systems, and horizontal scaling, which adds more nodes to the infrastructure. This dual approach provides flexibility for long-term growth and adaptation.

High Availability and Fault Tolerance

Resilience is one of the core design objectives in datacenter architecture. High availability mechanisms protect against unplanned outages by automatically recovering workloads when failures occur. Fault tolerance extends this by maintaining continuous workload availability without interruption.

VMware vSphere High Availability detects host or virtual machine failures and restarts affected workloads on healthy hosts. Distributed Resource Scheduler works alongside it to rebalance workloads across the cluster. This ensures that performance remains stable even after failover events.

Fault Tolerance provides an additional layer of protection for critical workloads. It mirrors a virtual machine in real time, maintaining a live shadow copy that instantly takes over if the host running the primary instance fails. Although resource-intensive, this feature protects essential applications from downtime caused by host failures; it does not protect against faults inside the guest operating system or application, which are replicated to the secondary copy.

Disaster recovery extends resilience across sites. Replication solutions enable data synchronization between primary and secondary datacenters. The recovery site must be designed with sufficient capacity and connectivity to restore services promptly. Recovery procedures must be clearly defined, tested regularly, and integrated into the overall operational plan.

Security and Compliance Considerations

Security must be embedded within the design architecture to protect both infrastructure and data. It involves multiple layers, including access control, network security, data protection, and compliance enforcement.

Access control is achieved through role-based management. By assigning permissions based on job functions, the environment minimizes risks from unauthorized activities. Integration with centralized identity services ensures consistent policy application across all systems.
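Role-based access with least privilege can be modeled as an explicit mapping from roles to privileges. The role and privilege names below are hypothetical and are not vSphere privilege identifiers.

```python
# Hypothetical roles and privileges, for illustration only.
ROLE_PRIVILEGES = {
    "vm-operator": {"vm.power_on", "vm.power_off", "vm.console"},
    "network-admin": {"network.edit_port_group"},
    "read-only": set(),
}


def is_allowed(user_roles, privilege):
    """Grant a privilege only if one of the user's roles explicitly includes it."""
    return any(privilege in ROLE_PRIVILEGES.get(role, set()) for role in user_roles)


print(is_allowed(["vm-operator"], "vm.power_on"))
print(is_allowed(["vm-operator"], "network.edit_port_group"))
```

Anything not explicitly granted is refused, including requests from roles the mapping does not know about.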

Network segmentation enhances security by isolating workloads. Micro-segmentation policies define traffic rules between virtual machines, reducing lateral attack potential. Distributed firewalls and security groups provide granular control over communication patterns.

Data security strategies include encryption of storage and network traffic, ensuring that sensitive information remains protected throughout its lifecycle. Backup and replication solutions must also use secure channels to prevent interception.

Compliance governance ensures that all security configurations align with organizational and regulatory standards. Regular audits and automated configuration checks maintain adherence to policies. Architects must design systems that make compliance a continuous process rather than a periodic task.

Documentation, Validation, and Design Handover

Validation ensures that the design functions as expected and meets all defined requirements. Testing should include performance benchmarking, failover simulations, and disaster recovery exercises. Validation provides assurance that the environment can handle real-world demands.

Comprehensive documentation supports both validation and long-term operations. It should include diagrams, configuration details, design decisions, and maintenance guidelines. Clear documentation ensures that administrators and engineers can manage and troubleshoot the environment effectively.

Design handover is the final stage of the process. It transfers responsibility from the architect to the operations team. The handover must include detailed operational procedures, escalation paths, and change management processes. Proper handover ensures that the infrastructure continues to function according to design intent and supports ongoing business operations seamlessly.

Continuous Optimization and Design Evolution

Datacenter design is not static; it evolves with changing workloads, technology advancements, and business strategies. Continuous optimization ensures that the infrastructure remains efficient, secure, and aligned with organizational goals.

Regular performance reviews identify areas for improvement, such as resource utilization inefficiencies or outdated configurations. Automation tools can be introduced to streamline management and reduce human error. Predictive analytics enable proactive adjustments before performance or capacity issues occur.

Adopting emerging technologies should be part of the long-term strategy. This could include integrating cloud services, adopting software-defined storage, or enhancing automation capabilities. Every enhancement should be validated against existing design principles to ensure consistency and reliability.

Knowledge sharing within teams promotes design maturity. Documenting lessons learned from previous projects and operational incidents helps improve future architectures. Continuous collaboration between architects, engineers, and management ensures that the datacenter design remains dynamic and resilient.

The 3V0-624 certification represents mastery of VMware datacenter design and architecture. It validates an individual’s ability to create complex virtual infrastructures that balance performance, security, and scalability. The exam measures how well candidates can interpret business needs and translate them into efficient and sustainable VMware solutions.

A well-crafted datacenter design is built upon deep technical expertise and strategic insight. Architects who excel in this domain not only deliver high-performing environments but also ensure adaptability for future requirements. Achieving this certification demonstrates advanced proficiency in developing solutions that enhance reliability, operational consistency, and long-term value. Through thoughtful design, VMware professionals can create datacenters that empower organizations to innovate confidently and operate efficiently in a dynamic digital landscape.

Advanced Datacenter Design Concepts and Architecture Strategy

The 3V0-624 certification focuses on mastering the process of designing a scalable, resilient, and efficient VMware vSphere datacenter infrastructure. The emphasis is not only on implementing technology but also on designing an environment that supports organizational objectives and operational continuity. This certification evaluates the architect’s ability to translate business and technical requirements into a structured virtualization design that aligns with industry best practices. The design process involves analyzing requirements, defining the conceptual model, developing logical and physical designs, validating assumptions, and ensuring that every design decision supports functionality, availability, and performance.

A datacenter design begins with understanding the purpose and outcomes expected from the virtualized infrastructure. The architect must evaluate both current operational challenges and anticipated future demands. A design should enable flexibility and adaptability, allowing the infrastructure to evolve as workloads grow or change. The 3V0-624 exam expects candidates to demonstrate not only technical skill but also design reasoning, including why one solution might be more suitable than another under certain conditions.

Every design decision involves trade-offs between cost, complexity, manageability, and performance. The architect’s role is to balance these factors strategically to deliver a solution that meets requirements without overengineering or introducing unnecessary risks. This certification focuses heavily on understanding the reasoning behind configuration choices, as each decision affects scalability, fault tolerance, and lifecycle maintenance.

Requirements Gathering and Design Framework

Successful datacenter design begins with structured requirement gathering. The process captures business objectives and functional and non-functional requirements, and it identifies constraints, assumptions, and risks. Functional requirements specify what the system must achieve, such as supporting a specific number of workloads or providing high availability. Non-functional requirements describe how the system performs, covering aspects like response times, scalability, or recovery objectives.

Identifying constraints early is critical because they define the boundaries within which the design must operate. Constraints may include hardware limitations, budgetary restrictions, existing vendor agreements, or internal policies. Assumptions, on the other hand, are expectations treated as true for the purpose of design that must later be validated. Risks represent potential events that could disrupt the design’s effectiveness, and mitigation strategies must be planned accordingly.
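
One way to keep these categories honest is to record them in a simple register and flag entries that lack follow-up. The sketch below is a hypothetical structure, not a prescribed template: each item is a dictionary with `kind`, `text`, and `follow_up` keys, where `follow_up` holds the validation plan for an assumption or the mitigation for a risk.

```python
def items_missing_follow_up(items):
    """Return assumptions without a validation plan and risks without a mitigation."""
    return [
        item
        for item in items
        if item["kind"] in ("assumption", "risk") and not item.get("follow_up", "").strip()
    ]


# Hypothetical register entries, for illustration only.
register = [
    {"kind": "constraint", "text": "Existing storage array must be reused"},
    {"kind": "assumption", "text": "WAN link between sites is 10 Gbps"},
    {"kind": "risk", "text": "Single core switch", "follow_up": "Add a redundant switch pair"},
]

for item in items_missing_follow_up(register):
    print("Needs follow-up:", item["text"])
```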

Stakeholder analysis forms another critical part of this framework. Each stakeholder has distinct goals that must be reflected in the design. Executives prioritize business continuity, cost efficiency, and compliance, while technical teams focus on performance, scalability, and operational simplicity. Balancing these perspectives ensures that the final architecture satisfies both strategic and operational goals.

The requirement phase should also involve capacity planning and future forecasting. Predicting growth patterns and workload demands allows architects to design scalable systems that accommodate expansion without major redesigns. This proactive planning prevents capacity shortages and maintains consistent performance as the environment evolves.
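
A rough forecast helps quantify how long current capacity will last. The sketch below assumes steady compound monthly growth, which is a simplification; the function name and the sample figures are illustrative only.

```python
import math


def months_until_full(used_tb, capacity_tb, monthly_growth_rate):
    """Estimate whole months until usage reaches capacity under compound growth.

    Returns 0 if capacity is already exhausted and None if usage is not growing.
    """
    if used_tb >= capacity_tb:
        return 0
    if monthly_growth_rate <= 0:
        return None
    months = math.log(capacity_tb / used_tb) / math.log(1 + monthly_growth_rate)
    return math.ceil(months)


# Assumed example: 50 TB used of 100 TB, growing 5% per month.
print(months_until_full(50, 100, 0.05))  # prints 15
```

A result like this gives the architect a concrete horizon for planning the next expansion rather than reacting to a shortage.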

Logical Architecture and System Structuring

The logical design represents the blueprint of how system components will interact. It defines compute clusters, storage configurations, and network segmentation, translating conceptual ideas into an organized virtual infrastructure. Logical design abstracts the underlying hardware and focuses on defining relationships, dependencies, and high-level configurations that ensure performance, security, and manageability.

For compute resources, the logical design outlines how hosts and clusters will be configured, how workloads will be distributed, and how failover scenarios will be managed. Cluster designs must include policies for load balancing, high availability, and resource distribution. The architecture must account for maintenance events while ensuring minimal disruption to workloads.

The networking component of the logical design establishes communication patterns between virtual machines, management systems, and storage resources. Proper segmentation using distributed switches and network policies ensures traffic isolation and optimal throughput. Network designs should also consider redundancy and quality of service to maintain performance consistency under varying load conditions.

Storage design defines how data will be provisioned and managed across datastores. The logical storage layer should align with workload performance requirements, providing a mix of capacity and speed optimized for different applications. Policies such as replication, deduplication, and encryption must be incorporated to ensure data integrity and availability.

Logical management design focuses on visibility, automation, and control. This includes defining monitoring strategies, alert mechanisms, and performance baselines. Centralized management enables efficient configuration, proactive troubleshooting, and consistent policy enforcement across the environment.

Physical Design and Implementation

The physical design is the stage where logical components are mapped to tangible infrastructure. It specifies the hardware models, network connections, storage architecture, and overall topology of the datacenter. The physical design ensures that the chosen hardware supports the logical configuration and meets performance and availability targets.

Server hardware selection must consider processing capacity, memory configurations, and energy efficiency. Physical host design should support clustering, high availability, and future scalability. Sizing decisions are influenced by workload density, redundancy requirements, and operational budgets.
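
The sizing logic can be sketched as a small calculation. The version below is a simplified estimate under stated assumptions: a 4:1 vCPU-to-core consolidation ratio, an 80% utilization ceiling, and one spare host for redundancy. All three values are illustrative defaults and should come from the actual requirements.

```python
import math


def hosts_required(total_vcpu, total_ram_gb, cores_per_host, ram_gb_per_host,
                   vcpu_per_core=4.0, max_utilization=0.8, spare_hosts=1):
    """Estimate host count from CPU and memory demand, plus spare hosts."""
    usable_vcpu_per_host = cores_per_host * vcpu_per_core * max_utilization
    usable_ram_per_host = ram_gb_per_host * max_utilization
    cpu_hosts = math.ceil(total_vcpu / usable_vcpu_per_host)
    ram_hosts = math.ceil(total_ram_gb / usable_ram_per_host)
    # The more constrained resource determines the base host count.
    return max(cpu_hosts, ram_hosts) + spare_hosts


# Assumed workload: 800 vCPUs and 4000 GB RAM on 32-core, 512 GB hosts.
print(hosts_required(800, 4000, 32, 512))  # prints 11
```

In this example memory, not CPU, is the limiting resource (10 hosts versus 8), which shows why both dimensions need to be checked before adding the redundancy host.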

The physical storage design determines which storage systems will be deployed and how they integrate with compute and network layers. It includes considerations for connectivity, redundancy, and data protection mechanisms. Proper tiering ensures that critical workloads access high-performance storage, while less critical workloads can use cost-efficient tiers.

Physical network topology should provide both performance and fault tolerance. Redundant paths, VLAN configurations, and link aggregation improve availability and reduce the risk of network bottlenecks. The design must ensure separation of management, storage, and user traffic to maintain security and consistent data flow.

Cooling, power management, and physical access control are additional elements of the design. Reliable datacenter operation requires stable environmental conditions, efficient energy use, and secure access to physical infrastructure. The design must also consider future capacity expansion, ensuring space and power resources are available for hardware growth.

Design for Availability and Reliability

High availability and fault tolerance form the backbone of datacenter reliability. The design must ensure that hardware or software failures do not interrupt business operations. High availability mechanisms automatically detect failures and recover workloads on healthy systems, reducing downtime.

Compute availability can be achieved through clustering and failover strategies. Virtual machines should be distributed across multiple hosts to avoid single points of failure, and the cluster must hold enough spare capacity to restart the workloads of a failed host. Distributed Resource Scheduler balances resource utilization across hosts, which helps maintain stability after failover events.
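
A quick capacity check illustrates the failover reasoning. The sketch below assumes memory is the limiting resource and takes the worst case in which the largest hosts fail; it is a simplified model, not the admission control algorithm of any product.

```python
def survives_host_failures(host_capacities_gb, used_gb, failures=1):
    """Return True if the surviving hosts can hold the current load.

    Worst case is assumed: the hosts with the most capacity are the ones lost.
    """
    if failures >= len(host_capacities_gb):
        return False
    surviving = sorted(host_capacities_gb)[: len(host_capacities_gb) - failures]
    return used_gb <= sum(surviving)


cluster = [512, 512, 512, 512]  # assumed host memory in GB
print(survives_host_failures(cluster, used_gb=1400))
print(survives_host_failures(cluster, used_gb=1600))
```

With four 512 GB hosts, a 1400 GB load still fits on the three survivors, while a 1600 GB load does not, so the second cluster has no real single-host failure tolerance.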

Storage reliability is ensured through redundancy and replication. RAID configurations and multipathing protect against disk and path failures, while snapshot technologies provide point-in-time recovery. Replication between datacenters provides disaster recovery capability, ensuring that data remains available even during site failures.

Network availability depends on redundant links, switch pairs, and load balancing. Failover paths must be predefined to automatically reroute traffic if a connection fails. The design must ensure that all critical services remain reachable even when a network component becomes unavailable.
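
The benefit of redundancy can be estimated with basic availability arithmetic. The sketch below assumes component failures are independent, which real networks only approximate, and the 99% figure is an invented example.

```python
def parallel_availability(component_availability, count):
    """Availability of `count` redundant components where one is sufficient."""
    return 1 - (1 - component_availability) ** count


def annual_downtime_minutes(availability):
    """Expected downtime per 365-day year for a given availability."""
    return (1 - availability) * 365 * 24 * 60


single = 0.99  # assumed availability of one uplink
paired = parallel_availability(single, 2)
print(round(paired, 6))
print(round(annual_downtime_minutes(single)), "vs", round(annual_downtime_minutes(paired)))
```

Under these assumptions, pairing two 99% links raises availability to roughly 99.99%, cutting expected downtime from days per year to under an hour.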

Reliability also involves continuous monitoring. Proactive health checks and alerting mechanisms enable early detection of potential failures. Regular testing of recovery processes ensures that failover mechanisms function correctly when needed.

Performance Management and Resource Optimization

Efficient performance management requires a balance between resource availability and workload demand. Proper capacity planning ensures that workloads have sufficient compute, memory, and storage resources without over-provisioning. The architect must anticipate both steady-state and peak workload conditions.

Resource pools are used to manage allocation and prioritization. Critical applications receive guaranteed resources through reservations, while limits prevent excessive resource consumption by non-critical workloads. This approach maintains consistent performance across varying demands.
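
The interaction of reservations, limits, and relative priority can be illustrated with a simplified allocation model. In the sketch below, each pool first receives its reservation, and the remaining capacity is divided in proportion to a `shares` weight, capped by each pool's limit. The `shares` weight, the pool names, and the numbers are assumptions, and this is not the actual scheduler algorithm used by ESXi.

```python
def allocate(capacity, pools):
    """Distribute capacity: reservations first, then by shares up to each limit.

    pools maps name -> {"reservation": r, "limit": l, "shares": s} with s > 0.
    """
    alloc = {name: p["reservation"] for name, p in pools.items()}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed capacity")
    active = {n for n, p in pools.items() if alloc[n] < p["limit"]}
    while remaining > 1e-9 and active:
        total_shares = sum(pools[n]["shares"] for n in active)
        handed_out = 0.0
        for name in list(active):
            offer = remaining * pools[name]["shares"] / total_shares
            grant = min(offer, pools[name]["limit"] - alloc[name])
            alloc[name] += grant
            handed_out += grant
            if alloc[name] >= pools[name]["limit"] - 1e-9:
                active.discard(name)  # pool is capped; others split the rest
        remaining -= handed_out
    return alloc


pools = {
    "prod": {"reservation": 40, "limit": 100, "shares": 2},
    "dev": {"reservation": 0, "limit": 10, "shares": 1},
    "test": {"reservation": 0, "limit": 100, "shares": 1},
}
print(allocate(100, pools))
```

Here the production pool keeps its guaranteed 40 units and receives the largest part of the remainder, while the development pool stops at its limit of 10 and the capacity it cannot use is redistributed to the other pools.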

Monitoring tools provide insights into system performance, identifying bottlenecks and inefficiencies. Analysis of metrics such as CPU ready time, memory contention, and storage latency helps in optimizing performance. Performance tuning should be an ongoing process based on real-time data and predictive modeling.
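
CPU ready values are often reported as a summation in milliseconds per sample interval, which is easier to interpret as a percentage. The sketch below applies the commonly documented conversion and assumes the 20-second interval used by real-time performance charts; longer rollup intervals need their own interval value. The function name is illustrative.

```python
def cpu_ready_percent(ready_ms, interval_seconds=20):
    """Convert a CPU ready summation (ms) into a percentage of the interval."""
    return ready_ms * 100 / (interval_seconds * 1000)


# Assumed sample: 1000 ms of ready time in a 20-second real-time interval.
print(cpu_ready_percent(1000))  # prints 5.0
```

For a virtual machine with several vCPUs, the summation may cover all vCPUs combined, so dividing the result by the vCPU count gives a per-vCPU view.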

Automation plays a significant role in optimization. Workload placement, scaling, and balancing can be dynamically managed to maintain efficiency. Automated policies reduce manual intervention and enhance response time to performance fluctuations.

Scalability must be a fundamental design consideration. Both vertical and horizontal scaling strategies should be implemented. Vertical scaling involves adding resources to existing systems, while horizontal scaling expands capacity by adding new hosts or clusters. Designing for modular expansion ensures long-term flexibility.

Security and Risk Mitigation

Security in the design stage ensures that the datacenter infrastructure protects data, workloads, and management systems from internal and external threats. This involves layered defense across compute, storage, and network components.

Access control begins with proper role-based permissions. Administrators should have access only to systems relevant to their responsibilities. Centralized authentication integrates user management and policy enforcement, ensuring consistent security practices.
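
Role-based permissions can be pictured as a mapping from roles to allowed actions. The sketch below uses hypothetical role and privilege names chosen for illustration; they are not the privilege identifiers of any real product.

```python
# Hypothetical roles and privileges, for illustration only.
ROLE_PRIVILEGES = {
    "vm-operator": {"vm.power_on", "vm.power_off", "vm.console"},
    "storage-admin": {"datastore.browse", "datastore.allocate"},
    "auditor": {"vm.view", "datastore.browse"},
}


def is_allowed(user_roles, privilege):
    """Return True if any of the user's roles grants the privilege."""
    return any(privilege in ROLE_PRIVILEGES.get(role, set()) for role in user_roles)


print(is_allowed(["auditor"], "vm.view"))
print(is_allowed(["auditor"], "vm.power_off"))
```

Because permissions attach to roles rather than to individuals, changing what a job function may do requires editing one role definition instead of every user account.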

Network segmentation isolates workloads and prevents lateral attacks. Micro-segmentation policies define communication boundaries, while distributed firewalls control traffic flow at the virtual machine level. Secure network configurations must include encrypted management channels and secure protocols for data transfer.